from IPython.display import HTML

HTML("""

<iframe

src="https://mrdbourke-trashify-demo-v4.hf.space"

frameborder="0"

width="850"

height="1150"

></iframe>

""")Object Detection with Hugging Face Transformers Tutorial

Note: If you’re running in Google Colab, make sure to enable GPU usage by going to Runtime -> Change runtime type -> select GPU.

Source code on GitHub | Online book version | Setup guide | Video Course (coming soon)

1 Overview

Welcome to the Learn Hugging Face Object Detection project!

Inside this project, we’ll learn bits and pieces about the Hugging Face ecosystem as well as how to build our own custom object detection model.

We’ll start with a collection of images with bounding box files as our dataset, fine-tune an existing computer vision model to detect items in an image and then share our model as a demo others can use.

Feel to keep reading through the notebook but if you’d like to run the code yourself, be sure to go through the setup guide first.

1.1 What we’re going to build

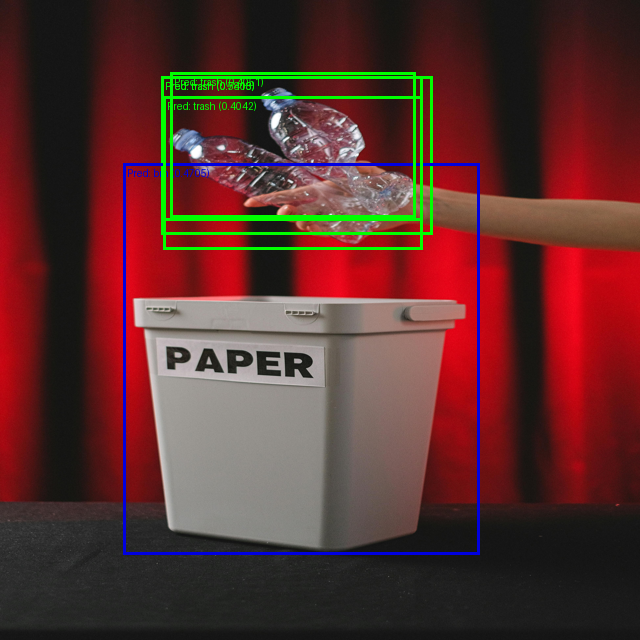

We’re going to be bulding Trashify 🚮, an object detection model which incentivises people to pick up trash in their local area by detecting bin, trash, hand.

If all three items are detected, a person gets +1 point!

For example, say you were going for a walk around your neighbourhood and took a photo of yourself picking up a piece (with your hand or trash arm) of trash and putting it in the bin, you would get a point.

With this object detection model, you could deploy it to an application which would automatically detect the target classes and then save the result to an online leaderboard.

The incentive would be to score the most points, in turn, picking up the most piecces of trash, in a given area.

More specifically, we’re going to follow the following steps:

- Data: Problem defintion and dataset preparation - Getting a dataset/setting up the problem space.

- Model: Finding, training and evaluating a model - Finding an object detection model suitable for our problem on Hugging Face and customizing it to our own dataset.

- Demo: Creating a demo and put our model into the real world - Sharing our trained model in a way others can access and use.

By the end of this project, you’ll have a trained model and demo on Hugging Face you can share with others:

1.2 What is object detection?

Object detection is the process of identifying and locating an item in an image.

Where item can mean almost anything.

For example:

- Detecting car licence plates in a video feed (videos are a series of images) for a parking lot entrance.

- Detecting delivery people walking towards your front door on a security camera.

- Detecting defects on a manufacturing line.

- Detecting pot holes in the road so repair works can automatically be scheduled.

- Detecting small pests (Varroa Mite) on the bodies of bees.

- Detecting weeds in a field so you know what to remove and what to keep.

And for some trash identification examples:

- Google open-sourcing CircularNet for helping to detect and identify different kinds of trash in waste management facilities.

- A machine learning paper for using a computer vision model on a Raspberry Pi (a small computer) for waste identification.

- Ameru is a company building a trash-identifying bin to sort automatically sort trash as people put it in, see the case study of hose they created their own custom dataset using Label Studio.

Note: Object detection is also sometimes referred to as image localization or object localization. For consistency, I will use the term object detection, however, either of these terms could substitute.

Image classification deals with classifying an image as a whole into a single class, object detection endeavours to find the specific target item and where it is in an image.

One of the most common ways of showing where an item is in an image is by displaying a bounding box (a rectangle-like box around the target item).

An object detection model will often take an input image tensor in the shape [3, 640, 640] ([colour_channels, height, width]) and output a tensor in the form [class_name, x_min, y_min, x_max, y_max] or [class_name, x1, y1, x2, y2] (this is two ways to write the same example format, there are more formats, we’ll see these below in Table 1).

Where:

class_name= The classification of the target item (e.g."car","person","banana","piece_of_trash", this could be almost anything).x_min= Thexvalue of the top left corner of the box.y_min= Theyvalue of the top left corner of the box.x_max= Thexvalue of the bottom right corner of the box.y_max= Theyvalue of the bottom right corner of the box.

When you get into the world of object detection, you will find that there are several different bounding box formats.

There are three major formats you should be familiar with: XYXY, XYWH, CXCYWH (there are more but these are the most common).

Knowing which bounding box format you’re working with can be the difference between a good model and a very poor model (wrong bounding boxes = wrong outcome).

We’ll get hands-on with a couple of these in this project.

But for an in-depth example of all three, I created a guide on different bounding box formats and how to draw them, reading this should give a good intuition behind each style of bounding box.

1.3 Why train your own object detection models?

You can customize pre-trained models for object detection as well as API-powered models and LLMs such as Gemini, LandingAI and DINO-X.

Depending on your requirements, there are several pros and cons for using your own model versus using an API.

Training/fine-tuning your own model:

| Pros | Cons |

|---|---|

| Control: Full control over model lifecycle. | Can be complex to get setup. |

| No usage limits (aside from compute constraints). | Requires dedicated compute resources for training/inference. |

| Can train once and deploy everywhere/whenever you want (for example, Tesla deploying a model to all self-driving cars). | Requires maintenance over time to ensure performance remains up to par. |

| Privacy: Data can be kept in-house/app and doesn’t need to go to a third party. | Can require longer development cycles compared to using existing APIs. |

| Speed: Customizing a small model for a specific use case often means it runs much faster on local hardware, for example, modern object detection models can achieve 70-100+ FPS (frames per second) on modern GPU hardware. |

Using a pre-built model API:

| Pros | Cons |

|---|---|

| Ease of use: often can be setup within a few lines of code. | If the model API goes down, your service goes down. |

| No maintenance of compute resources. | Data is required to be sent to a third-party for processing. |

| Access to the most advanced models. | The API may have usage limits per day/time period. |

| Can scale if usage increases. | Can be much slower than using dedicated models due to requiring an API call. |

For this project, we’re going to focus on fine-tuning our own model.

1.4 Workflow we’re going to follow

The good news for us is that the Hugging Face ecosystem makes working on custom machine learning projects an absolute blast.

And workflow is reproducible across several kinds of projects.

Start with data (or skip this step and go straight to a model) -> get/customize a model -> build and share a demo.

With this in mind, our motto is data, model, demo!

More specifically, we’re going to follow the rough workflow of:

- Create, preprocess and load data using Hugging Face Datasets.

- Define the model we’d like use with

transformers.AutoModelForObjectDetection(or another similar model class). - Define training arguments (these are hyperparameters for our model) with

transformers.TrainingArguments. - Pass

TrainingArgumentsfrom 3 and target datasets to an instance oftransformers.Trainer. - Train the model by calling

Trainer.train(). - Save the model (to our local machine or to the Hugging Face Hub).

- Evaluate the trained model by making and inspecting predctions on the test data.

- Turn the model into a shareable demo.

I say rough because machine learning projects are often non-linear in nature.

As in, because machine learning projects involve many experiments, they can kind of be all over the place.

But this worfklow will give us some good guidelines to follow.

2 Importing necessary libraries

Let’s get started!

First, we’ll import the required libraries.

If you’re running on your local computer, be sure to check out the getting setup guide to make sure you have everything you need.

If you’re using Google Colab, many of them the following libraries will be installed by default.

However, we’ll have to install a few extras to get everything working.

If you’re running on Google Colab, this notebook will work best with access to a GPU. To enable a GPU, go to Runtime ➡️ Change runtime type ➡️ Hardware accelerator ➡️ GPU.

We’ll need to install the following libraries from the Hugging Face ecosystem:

transformers- comes pre-installed on Google Colab but if you’re running on your local machine, you can install it viapip install transformers.datasets- a library for accessing and manipulating datasets on and off the Hugging Face Hub, you can install it viapip install datasets.gradio- a library for creating interactive demos of machine learning models, you can install it viapip install gradio.- Optional:

evaluate- a library for evaluating machine learning model performance with various metrics, you can install it viapip install evaluate. - Optional:

accelerate- a library for training machine learning models faster, you can install it viapip install accelerate.

And the following library is not part of the Hugging Face ecosystem but it is helpful for evaluating our models:

torchmetrics- a library containing many evaluation metrics compatible with PyTorch/Transformers, you can install it viapip install torchmetrics.

We can also check the versions of our software with package_name.__version__.

# Install/import dependencies (this is mostly for Google Colab, as the other dependences are available by default in Colab)

try:

import datasets

import gradio as gr

import torchmetrics

import pycocotools

except ModuleNotFoundError:

# If a module isn't found, install it

!pip install -U datasets gradio # -U stands for "upgrade" so we'll get the latest version by default

!pip install -U torchmetrics[detection]

import datasets

import gradio as gr

# Required for evalation

import torchmetrics

import pycocotools # make sure we have this for torchmetrics

import random

import numpy as np

import torch

import transformers

# Check versions (as long as you've got the following versions or higher, you should be good)

print(f"Using transformers version: {transformers.__version__}")

print(f"Using datasets version: {datasets.__version__}")

print(f"Using torch version: {torch.__version__}")

print(f"Using torchmetrics version: {torchmetrics.__version__}")Using transformers version: 4.53.0

Using datasets version: 3.6.0

Using torch version: 2.7.0+cu126

Using torchmetrics version: 1.7.1Wonderful, as long as your versions are the same or higher to the versions above, you should be able to run the code below.

3 Getting a dataset

Okay, now we’re got the required libraries, let’s get a dataset.

Getting a dataset is one of the most important things a machine learning project.

The dataset you often determines the type of model you use as well as the quality of the outputs of that model.

Meaning, if you have a high quality dataset, chances are, your future model could also have high quality outputs.

It also means if your dataset is of poor quality, your model will likely also have poor quality outputs.

For an object detection problem, your dataset will likely come in the form of a group of images as well as a file with annotations belonging to those images.

For example, you might have the following setup:

folder_of_images/

image_1.jpeg

image_2.jpeg

image_3.jpeg

annotations.jsonWhere the annotations.json contains details about the contains of each image:

annotations.json

[

{

'image_path': 'image_1.jpeg',

'image_id': 42,

'annotations':

{

'file_name': ['image_1.jpeg'],

'image_id': [42],

'category_id': [1],

'bbox': [

[360.20001220703125, 528.5, 177.1999969482422, 261.79998779296875],

],

'area': [46390.9609375]

},

'label_source': 'manual_prodigy_label',

'image_source': 'manual_taken_photo'

},

...(more labels down here)

]Don’t worry too much about the exact meaning of everything in the above annotations.json file for now (this is only one example, there are many different ways object detection information could be displayed).

The main point is that each target image is paired with an assosciated label.

Now like all good machine learning cooking shows, I’ve prepared a dataset from earlier.

It’s stored on Hugging Face Datasets (also called the Hugging Face Hub) under the name mrdbourke/trashify_manual_labelled_images.

This is a dataset I’ve collected manually by hand (yes, by picking up 1000+ pieces of trash and photographing it) as well as labelled by hand (by drawing boxes on each image with a labelling tool called Prodigy).

3.1 Loading the dataset

To load a dataset stored on the Hugging Face Hub we can use the datasets.load_dataset(path=NAME_OR_PATH_OF_DATASET) function and pass it the name/path of the dataset we want to load.

In our case, our dataset name is mrdbourke/trashify_manual_labelled_images (you can also change this for your own dataset).

And since our dataset is hosted on Hugging Face, when we run the following code for the first time, it will download it.

If your target dataset is quite large, this download may take a while.

However, once the dataset is downloaded, subsequent reloads will be mush faster.

One way to find out what a function or method does is to lookup the documentation.

Another way is to write the function/method name with a question mark afterwards.

For example:

from datasets import load_dataset

load_dataset?Give it a try.

You should see some helpful information about what inputs the method takes and how they are used.

Let’s load our dataset and check it out.

from datasets import load_dataset

# Load our Trashify dataset

dataset = load_dataset(path="mrdbourke/trashify_manual_labelled_images")

datasetDatasetDict({

train: Dataset({

features: ['image', 'image_id', 'annotations', 'label_source', 'image_source'],

num_rows: 1128

})

})Beautiful!

We can see that there is a train split of the dataset already which currently contains all of the samples (1128 in total).

There are also some features that come with our dataset which are related to our object detection goal.

print(f"[INFO] Length of original dataset: {len(dataset['train'])}")

print(f"[INFO] Dataset features:")

from pprint import pprint

pprint(dataset['train'].features)[INFO] Length of original dataset: 1128

[INFO] Dataset features:

{'annotations': Sequence(feature={'area': Value(dtype='float32', id=None),

'bbox': Sequence(feature=Value(dtype='float32',

id=None),

length=4,

id=None),

'category_id': ClassLabel(names=['bin',

'hand',

'not_bin',

'not_hand',

'not_trash',

'trash',

'trash_arm'],

id=None),

'file_name': Value(dtype='string', id=None),

'image_id': Value(dtype='int64', id=None),

'iscrowd': Value(dtype='int64', id=None)},

length=-1,

id=None),

'image': Image(mode=None, decode=True, id=None),

'image_id': Value(dtype='int64', id=None),

'image_source': Value(dtype='string', id=None),

'label_source': Value(dtype='string', id=None)}Nice!

We can see our dataset features contain the following fields:

annotations- A sequence of values including abboxfield (short for bounding box) as well ascategory_idfield which contains the target objects we’d like to identify in our images (['bin', 'hand', 'not_bin', 'not_hand', 'not_trash', 'trash', 'trash_arm']).image- This contains the target image assosciated with a given set ofannotations(in our case, images and annotations have been uploaded to the Hugging Face Hub together).image_id- A unique ID assigned to a given sample.image_source- Where the image came from (all of our images have been manually collected).label_source- Where the image label came from (all of our images have been manually labelled).

3.2 Viewing a single sample from our data

Now we’ve seen the features, let’s check out a single sample from our dataset.

We can index on a single sample of the "train" set just like indexing on a Python list.

# View a single sample of the dataset

dataset["train"][42]{'image': <PIL.Image.Image image mode=RGB size=960x1280>,

'image_id': 745,

'annotations': {'file_name': ['094f4f41-dc07-4704-96d7-8d5e82c9edb9.jpeg',

'094f4f41-dc07-4704-96d7-8d5e82c9edb9.jpeg',

'094f4f41-dc07-4704-96d7-8d5e82c9edb9.jpeg'],

'image_id': [745, 745, 745],

'category_id': [5, 1, 0],

'bbox': [[333.1000061035156,

611.2000122070312,

244.89999389648438,

321.29998779296875],

[504.0, 612.9000244140625, 451.29998779296875, 650.7999877929688],

[202.8000030517578,

366.20001220703125,

532.9000244140625,

555.4000244140625]],

'iscrowd': [0, 0, 0],

'area': [78686.3671875, 293706.03125, 295972.65625]},

'label_source': 'manual_prodigy_label',

'image_source': 'manual_taken_photo'}We see a few more details here compared to just looking at the features.

We notice the image is a PIL.Image with size 960x1280 (width x height).

And the file_name is a UUID (Universially Unique Identifier, made with uuid.uuid4()).

The bbox field in the annotations key contains a list of bounding boxes assosciated with the image.

In this case, there are 3 different bounding boxes.

With the category_id values of 5, 1, 0 (we’ll map these to class names shortly).

Let’s inspect a single bounding box.

dataset["train"][42]["annotations"]["bbox"][0][333.1000061035156, 611.2000122070312, 244.89999389648438, 321.29998779296875]This array gives us the coordinates of a single bounding box in the format XYWH.

Where:

Xis the x-coordinate of the top left corner of the box (333.1).Yis the y-coordinate of the top left corner of the box (611.2).Wis the width of the box (244.9).His the height of the box (321.3).

All of these values are in absolute pixel values (meaning an x-coordinate of 333.1 is 333.1 pixels across on the x-axis).

How do I know this?

I know this because I created the box labels and this is the default value Prodigy (the labelling tool I used) outputs boxes.

However, if you were to come across another bouding box dataset, one of the first steps would be to figure out what format your bounding boxes are in.

We’ll see more on bounding box formats shortly.

3.3 Extracting the category names from our data

Before we start to visualize our sample image and bounding boxes, let’s extract the category names from our dataset.

We can do so by accessing the features attribute our of dataset and then following it through to find the category_id feature, this contains a list of our text-based class names.

When working with different categories, it’s good practice to get a list or mapping (e.g. a Python dictionary) from category name to ID and vice versa.

For example:

# Category to ID

{"class_name": 0}

# ID to Category

{0: "class_name"}Not all datasets will have this implemented in an easy to access way, so it might take a bit of research to get it created.

Let’s access the class names in our dataset and save them to a variable categories.

# Get the categories from the dataset

# Note: This requires the dataset to have been uploaded with this information setup, not all datasets will have this available.

categories = dataset["train"].features["annotations"]["category_id"]

# Get the names attribute

categories.feature.names['bin', 'hand', 'not_bin', 'not_hand', 'not_trash', 'trash', 'trash_arm']Beautiful!

We get the following class names:

bin- A rubbish bin or trash can.hand- A person’s hand.not_bin- Negative version ofbinfor items that look like abinbut shouldn’t be identified as one.not_hand- Negative version ofhandfor items that look like ahandbut shouldn’t be identified as one.not_trash- Negative version oftrashfor items that look liketrashbut shouldn’t be identified as it.trash- An item of trash you might find on a walk such as an old plastic bottle, food wrapper, cigarette butt or used coffee cup.trash_arm- A mechanical arm used for picking up trash.

The goal of our computer vision model will be: given an image, detect items belonging to these target classes if they are present.

3.4 Creating a mapping from numbers to labels

Now we’ve got our text-based class names, let’s create a mapping from label to ID and ID to label.

For each of these, Hugging Face use the terminology label2id and id2label respectively.

# Map ID's to class names and vice versa

id2label = {i: class_name for i, class_name in enumerate(categories.feature.names)}

label2id = {value: key for key, value in id2label.items()}

print(f"Label to ID mapping:\n{label2id}\n")

print(f"ID to label mapping:\n{id2label}")

# id2label, label2idLabel to ID mapping:

{'bin': 0, 'hand': 1, 'not_bin': 2, 'not_hand': 3, 'not_trash': 4, 'trash': 5, 'trash_arm': 6}

ID to label mapping:

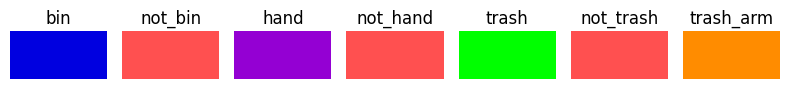

{0: 'bin', 1: 'hand', 2: 'not_bin', 3: 'not_hand', 4: 'not_trash', 5: 'trash', 6: 'trash_arm'}3.5 Creating a colour palette

Ok we know which class name matches to which ID, now let’s create a dictionary of different colours we can use to display our bounding boxes.

It’s one thing to plot bounding boxes, it’s another thing to make them look nice.

And we always want our plots looking nice!

We’ll colour the positive classes bin, hand, trash, trash_arm in nice bright colours.

And the negative classes not_bin, not_hand, not_trash in a light red colour to indicate they’re the negative versions.

Our colour dictionary will map class_name -> (red, green, blue) (or RGB) colour values.

# Make colour dictionary

colour_palette = {

'bin': (0, 0, 224), # Bright Blue (High contrast with greenery) in format (red, green, blue)

'not_bin': (255, 80, 80), # Light Red to indicate negative class

'hand': (148, 0, 211), # Dark Purple (Contrasts well with skin tones)

'not_hand': (255, 80, 80), # Light Red to indicate negative class

'trash': (0, 255, 0), # Bright Green (For trash-related items)

'not_trash': (255, 80, 80), # Light Red to indicate negative class

'trash_arm': (255, 140, 0), # Deep Orange (Highly visible)

}Let’s check out what these colours look like!

It’s the ABV motto: Always Be Visualizing!

We can plot our colours with matplotlib.

We’ll just have to write a small function to normalize our colour values from [0, 255] to [0, 1] (matplotlib expects our colour values to be between 0 and 1).

import matplotlib.pyplot as plt

import numpy as np

# Normalize RGB values to 0-1 range

def normalize_rgb(rgb_tuple):

return tuple(x/255 for x in rgb_tuple)

# Turn colors into normalized RGB values for matplotlib

colors_and_labels_rgb = [(key, normalize_rgb(value)) for key, value in colour_palette.items()]

# Create figure and axis

fig, ax = plt.subplots(1, 7, figsize=(8, 1))

# Flatten the axis array for easier iteration

ax = ax.flatten()

# Plot each color square

for idx, (label, color) in enumerate(colors_and_labels_rgb):

ax[idx].add_patch(plt.Rectangle(xy=(0, 0),

width=1,

height=1,

facecolor=color))

ax[idx].set_title(label)

ax[idx].set_xlim(0, 1)

ax[idx].set_ylim(0, 1)

ax[idx].axis('off')

plt.tight_layout()

plt.show()

Sensational!

Now we know what colours to look out for when we visualize our bounding boxes.

4 Plotting a single image and visualizing the boxes

Okay, okay, finally time to plot an image!

Let’s take a random sample from our dataset and plot the image as well as the box on it.

4.1 Creating helper functions to help with visualization

To save some space in our notebook (plotting many images can increase the size of our notebook dramatically), we’ll create two small helper functions:

half_image- Halves the size of a given image.half_boxes- Divides the input coordinates of a given input box by 2.

These functions aren’t 100% necessary in our workflow.

They’re just to make the images slightly smaller so they fit better in the notebook.

import PIL

def half_image(image: PIL.Image) -> PIL.Image:

"""

Resizes a given input image by half and returns the smaller version.

"""

return image.resize(size=(image.size[0] // 2, image.size[1] // 2))

def half_boxes(boxes):

"""

Halves an array/tensor of input boxes and returns them. Necessary for plotting them on a half-sized image.

For example:

boxes = [100, 100, 100, 100]

half_boxes = half_boxes(boxes)

print(half_boxes)

>>> [50, 50, 50, 50]

"""

if isinstance(boxes, list):

# If boxes are list of lists, then we have multiple boxes

for box in boxes:

if isinstance(box, list):

return [[coordinate // 2 for coordinate in box] for box in boxes]

else:

return [coordinate // 2 for coordinate in boxes]

if isinstance(boxes, np.ndarray):

return (boxes // 2)

if isinstance(boxes, torch.Tensor):

return (boxes // 2)

# Test the functions

image_test = dataset["train"][42]["image"]

image_test_half = half_image(image_test)

print(f"[INFO] Original image size: {image_test.size} | Half image size: {image_test_half.size}")

boxes_test_list = [100, 100, 100, 100]

print(f"[INFO] Original boxes: {boxes_test_list} | Half boxes: {half_boxes(boxes_test_list)}")

boxes_test_torch = torch.tensor([100.0, 100.0, 100.0, 100.0])

print(f"[INFO] Original boxes: {boxes_test_torch} | Half boxes: {half_boxes(boxes_test_torch)}")[INFO] Original image size: (960, 1280) | Half image size: (480, 640)

[INFO] Original boxes: [100, 100, 100, 100] | Half boxes: [50, 50, 50, 50]

[INFO] Original boxes: tensor([100., 100., 100., 100.]) | Half boxes: tensor([50., 50., 50., 50.])4.2 Plotting boxes on a single image step by step

To plot an image and its assosciated boxes, we’ll do the following steps:

- Select a random sample from the

dataset. - Extract the

"image"(our image is inPILformat) and"bbox"keys from the random sample.- We can also optionally halve the size of our image/boxes to save space. In our case, we will halve our image and boxes.

- Turn the box coordinates into a

torch.tensor(we’ll be usingtorchvisionutilities to plot the image and boxes). - Convert the box format from

XYXYtoXYWHusingtorchvision.ops.box_convert(we do this becausetorchvision.utils.draw_bounding_boxesrequiresXYXYformat as input). - Get a list of label names (e.g.

"bin", "trash", etc) assosciated with each of the boxes as well as a list of colours to match (these will be from ourcolour_palette). - Draw the boxes on the target image by:

- Turning the image into a tensor with

torchvision.transforms.functional.pil_to_tensor. - Draw the bounding boxes on our image tensor with

torchvision.utils.draw_bounding_boxes. - Turn the image and bounding box tensors back into a

PILimage withtorchvision.transforms.functional.to_pil_image.

- Turning the image into a tensor with

Phew!

A fair few steps…

But we’ve got this!

If the terms XYXY or XYWH or all of the drawing methods sound a bit confusing or intimidating, don’t worry, there’s a fair bit going on here.

We’ll cover bounding box formats, such as XYXY shortly.

In the meantime, if you want to learn more about different bounding box formats and how to draw them, I wrote A Guide to Bounding Box Formats and How to Draw Them which you might find helpful.

# Plotting a bounding box on a single image

import random

import torch

from torchvision.ops import box_convert

from torchvision.utils import draw_bounding_boxes

from torchvision.transforms.functional import pil_to_tensor, to_pil_image

# 1. Select a random sample from our dataset

random_index = random.randint(0, len(dataset["train"]))

print(f"[INFO] Showing training sample from index: {random_index}")

random_sample = dataset["train"][random_index]

# 2. Get image and boxes from random sample

random_sample_image = random_sample["image"]

random_sample_boxes = random_sample["annotations"]["bbox"]

# Optional: Half the image and boxes for space saving (all of the following code will work with/without half size images)

half_random_sample_image = half_image(random_sample_image)

half_random_sample_boxes = half_boxes(random_sample_boxes)

# 3. Turn box coordinates in a tensor

boxes_xywh = torch.tensor(half_random_sample_boxes)

print(f"Boxes in XYWH format: {boxes_xywh}")

# 4. Convert boxes from XYWH -> XYXY

# torchvision.utils.draw_bounding_boxes requires input boxes in XYXY format (X_min, y_min, X_max, y_max)

boxes_xyxy = box_convert(boxes=boxes_xywh,

in_fmt="xywh",

out_fmt="xyxy")

print(f"Boxes XYXY: {boxes_xyxy}")

# 5. Get label names of target boxes and colours to match

random_sample_label_names = [categories.int2str(x) for x in random_sample["annotations"]["category_id"]]

random_sample_colours = [colour_palette[label_name] for label_name in random_sample_label_names]

print(f"Label names: {random_sample_label_names}")

print(f"Colour codes: {random_sample_colours}")

# 6. Draw the boxes on the image as a tensor and then turn it into a PIL image

to_pil_image(

pic=draw_bounding_boxes(

image=pil_to_tensor(pic=half_random_sample_image),

boxes=boxes_xyxy,

colors=random_sample_colours,

labels=random_sample_label_names,

width=2,

label_colors=random_sample_colours

)

)[INFO] Showing training sample from index: 264

Boxes in XYWH format: tensor([[ 64., 256., 308., 304.],

[267., 344., 92., 121.],

[149., 256., 175., 186.]])

Boxes XYXY: tensor([[ 64., 256., 372., 560.],

[267., 344., 359., 465.],

[149., 256., 324., 442.]])

Label names: ['bin', 'hand', 'trash']

Colour codes: [(0, 0, 224), (148, 0, 211), (0, 255, 0)]

Outstanding!

Our first official bounding boxes plotted on an image!

Now the idea of Trashify 🚮 is coming to life.

Depending on the random sample you’re looking at, you should see some combination of ['bin', 'hand', 'not_bin', 'not_hand', 'not_trash', 'trash', 'trash_arm'].

Our goal will be to build an object detection model to replicate these boxes on a given image.

Whenever working with a new dataset, I find it good practice to view 100+ random samples of the data.

In our case, this would mean viewing 100 random images with their bounding boxes drawn on them.

Doing so starts to build your own intuition of the data.

Using this intuition, along with evaluation metrics, you can start to get a better idea of how your model might be performing later on.

Keep this in mind for any new dataset or problem space you’re working on.

Start by looking at 100+ random samples.

And yes, generally more is better.

So you can practice by running the code cell above a number of times to see the different kinds of images and boxes in the dataset.

Can you think of any scenarios which the dataset might be missing?

5 Different bounding box formats

When drawing our bounding box, we discussed the terms XYXY and XYWH.

Well, we didn’t really discuss these at all…

But that’s why we’re here.

One of the most confusing things in the world of object detection is the different formats bounding boxes come in.

Are your boxes in XYXY, XYWH or CXCYWH?

Are they in absolute format?

Or normalized format?

Perhaps a table will help us.

The following table contains a non-exhaustive list of some of the most common bounding box formats you’ll come across in the wild.

| Box format | Description | Absolute Example | Normalized Example | Source |

|---|---|---|---|---|

| XYXY | Describes the top left corner coordinates (x1, y1) as well as the bottom right corner coordinates of a box. Also referred to as: [x1, y1, x2, y2] or [x_min, y_min, x_max, y_max] |

[8.9, 275.3, 867.5, 964.0] |

[0.009, 0.215, 0.904, 0.753] |

PASCAL VOC Dataset uses the absolute version of this format, torchvision.utils.draw_bounding_boxes defaults to the absolute version of this format. |

| XYWH | Describes the top left corner coordinates (x1, y1) as well as the width (box_width) and height (box_height) of the target box. The bottom right corners (x2, y2) are found by adding the width and height to the top left corner coordinates (x1 + box_width, y1 + box_height). Also referred to as: [x1, y1, box_width, box_height] or [x_min, y_min, box_width, box_height] |

[8.9, 275.3, 858.6, 688.7] |

[0.009, 0.215, 0.894, 0.538] |

The COCO (Common Objects in Context) dataset uses the absolute version of this format, see the section under “bbox”. |

| CXCYWH | Describes the center coordinates of the bounding box (center_x, center_y) as well as the width (box_width) and height (box_height) of the target box. Also referred to as: [center_x, center_y, box_width, box_height] |

[438.2, 619.65, 858.6, 688.7] |

[0.456, 0.484, 0.894, 0.538] |

Normalized version introduced in the YOLOv3 (You Only Look Once) paper and is used by many later forms of YOLO. |

5.1 Absolute or normalized format?

In absolute coordinate form, bounding box values are in the same format as the width and height dimensions (e.g. our image is 960x1280 pixels).

For example in XYXY format: ["bin", 8.9, 275.3, 867.5, 964.0]

An (x1, y1) (or (x_min, y_min)) coordinate of (8.9, 275.3) means the top left corner is 8.9 pixels in on the x-axis, and 275.3 pixels down on the y-axis.

In normalized coordinate form, values are between [0, 1] and are proportions of the image width and height.

For example in XYXY format: ["bin", 0.009, 0.215, 0.904, 0.753]

A normalized (x1, y1) (or (x_min, y_min)) coordinate of (0.009, 0.215) means the top left corner is 0.009 * image_width pixels in on the x-axis and 0.215 * image_height down on the y-axis.

To convert absolute coordinates to normalized, you can divide x-axis values by the image width and y-axis values by the image height.

\[ x_{\text{normalized}} = \frac{x_{\text{absolute}}}{\text{image\_width}} \quad y_{\text{normalized}} = \frac{y_{\text{absolute}}}{\text{image\_height}} \]

5.2 Which bounding box format should you use?

The bounding box format you use will depend on the framework, model and existing data you’re trying to use.

For example, the take the following frameworks:

- PyTorch - If you’re using PyTorch pre-trained models, for example,

torchvision.models.detection.fasterrcnn_resnet50_fpn, you’ll want absoluteXYXY([x1, y1, x2, y2]) format. - Hugging Face Transformers - If you’re using a Hugging Face Transformers model such as RT-DETRv2, you’ll want to take note that outputs from the model can be of one type (e.g.

CXCYWH) but they can be post-processed into another type (e.g. absoluteXYXY). - Ultralytics YOLO - If you’re using a YOLO-like model such as Ultralytics YOLO, you’ll want normalized

CXCYWH([center_x, center_y, width, height]) format. - Google Gemini - If you’re using Google Gemini to predict bounding boxes on your images, then you’ll want to pay attention to the special

[y_min, x_min, y_max, x_max](YXYX) normalized coordinates.

Or if you note that someone has said their model is pre-trained on the COCO dataset, chances are the data has been formatted in XYWH format (see Table 1).

For more on different bounding box formats and how to draw them, see A Guide to Bounding Box Formats and How to Draw Them.

6 Getting an object detection model

There are two main ways of getting an object detection model:

- Building it yourself. For example, constructing it layer by layer, testing it and training it on your target problem.

- Using an existing one. For example, find an existing model on a problem space similar to your own and then adapt it via transfer learning to your own task.

In our case, we’re going to focus on the latter.

We’ll be taking a pre-trained object detection model and fine-tuning it on our Trashify 🚮 dataset so it outputs the boxes and labels we’re after.

6.1 Places to get object detection models

Instead of building your own machine learning model from scratch, it’s common practice to take an existing model that works on similar problem space to yours and then fine-tune it to your own use case.

There are several places to get object detection models:

| Location | Description |

|---|---|

| Hugging Face Hub | One the best places on the internet to find open-source machine learning models of nearly any kind. You can find pre-trained object detection models here such as facebook/detr-resnet-50, a model from Facebook (Meta) and microsoft/conditional-detr-resnet-50, a model from Microsoft. And RT-DETRv2, the model we’re going to use as our base model. Many of the models are permissively licensed, meaning you can use them for your own projects. |

| Apache 2.0 Object Detection Models | A list collected by myself of open-source, permissively licenced and high performing object detection models. |

torchvision |

PyTorch’s built-in domain library for computer vision has several pre-trained object detection models which you can use in your own workflows. |

| paperswithcode.com/task/object-detection | Whilst not a direct place to download object detection models from, paperswithcode contains benchmarks for many machine learning tasks (including object detection) which shows the current state of the art (best performing) models and usually includes links to where to get the code. |

| Detectron2 | Detectron2 is an open-source library to help with many of the tasks in detecting items in images. Inside you’ll find several pre-trained and adaptable models as well as utilities such as data loaders for object detection and segmentation tasks. |

| YOLO Series | A running series of “You Only Look Once” models. Usually, the higher the number, the better performing. For example, YOLOv11 by Ultralytics should outperform YOLOv10, however, this often requires testing on your own dataset. Beware of the license, it is under the AGPL-3.0 license which may cause issues in some organizations. |

mmdetection library |

An open-source library from the OpenMMLab which contains many different open-source models as well as detection-specific utilties. |

When you find a pre-trained object detection model, you’ll often see statements such as:

Conditional DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images).

Source: https://huggingface.co/microsoft/conditional-detr-resnet-50

This means the model has already been trained on the COCO object detection dataset which contains 118,000 images and 80 classes such as ["cake", "person", "skateboard"...].

This is a good thing.

It means that the model should have a fairly good starting point when we try to adapt it to our own project.

6.2 Downloading our model from Hugging Face

For our Trashify 🚮 project we’re going to be using the pre-trained object detection model PekingU/rtdetr_v2_r50vd which was originally introduced in the paper RT-DETRv2: Improved Baseline with Bag-of-Freebies for Real-Time Detection Transformer.

The term “DETR” stands for “DEtection TRansformer”.

Where “Transformer” refers to the Transformer neural network architecture, specifically the Vision Transformer (or ViT) rather than the Hugging Face transformers library (quite confusing, yes).

So DETR means “performing detection with the Transformer architecture”.

And the “RT” part stands for “Real Time” as in, the model is capable at performing predictions at over 30 FPS (a standard rate for video feeds).

To use this model, there are some helpful documentation resources we should be aware of:

| Resource | Description |

|---|---|

| RT-DETRv2 documentation | Contains detailed information on each of the transformers.RTDetrV2 classes. |

transformers.RTDetrV2Config |

Contains the configuration settings for our model such as number of layers and other hyperparameters. |

transformers.RTDetrImageProcessor |

Contains several preprocessing on post processing functions and settings for data going into and out of our model. Here we can set values such as size in the preprocess method which will resize our images to a certain size. We can also use the post_process_object_detection method to process the raw outputs of our model into a more usable format. Note: Even though our model is RT-DETRv2, it uses the original RT-DETR processor. |

transformers.RTDetrV2ForObjectDetection |

This will enable us to load the RT-DETRv2 model weights and enable to pass data through them via the forward method. |

transformers.AutoImageProcessor |

This will enable us to create an instance of transformers.RTDetrImageProcessor by passing the model name PekingU/rtdetr_v2_r50vd to the from_pretrained method. Hugging Face Transformers uses several Auto Classes for various problem spaces and models. |

transformers.AutoModelForObjectDetection |

Enables us to load the model architecture and weights for the RT-DETRv2 architecture by passing the model name PekingU/rtdetr_v2_r50vd to the from_pretrained method. |

We’ll get hands-on which each of these throughout the project.

For now, if you’d like to read up more on each, I’d highly recommend it.

Knowing how to navigate and read through a framework’s documentation is a very helpful skill to have.

There are other object detection models we could try on the Hugging Face Hub such as facebook/detr-resnet-50 or IDEA-Research/dab-detr-resnet-50-dc5-pat3.

For now, we’ll stick with PekingU/rtdetr_v2_r50vd.

It’s easy to get stuck figuring out which model to use instead of just trying one and seeing how it goes.

Best to get something small working with one model and try another one later as part of a series of experiments to try and improve your results.

Spoiler: After trying several different object detection models on our problem, I found PekingU/rtdetr_v2_r50vd to be one of the best. Perhaps the newer D-FINE model might do better but I leave this for exploration.

We can load our model with transformers.AutoModelForObjectDetection.from_pretrained and passing in the following parameters:

pretrained_model_name_or_path- Our target model, which can be a local path or Hugging Face model name (e.g.PekingU/rtdetr_v2_r50vd).label2id- A dictionary mapping our class names/labels to their numerical ID, this is so our model will know how many classes to output.id2label- A dictionary mapping numerical IDs to our class names/labels, so our model will know how many classes we’re working with and what their IDs are.ignore_mismatched_sizes=True(default) - We’ll set this toTrueso that our model can be instatiated with a varying number of classes compared to what it may have been trained on (e.g. if our model was trained on the 91 classes from COCO, we only need 7).

See the full documentation for a full list of parameters we can use.

Let’s create a model!

import warnings

warnings.filterwarnings("ignore", category=UserWarning, module="torch.nn.modules.module") # turn off warnings for loading the model (feel free to comment this if you want to see the warnings)

from transformers import AutoModelForObjectDetection

MODEL_NAME = "PekingU/rtdetr_v2_r50vd"

model = AutoModelForObjectDetection.from_pretrained(

pretrained_model_name_or_path=MODEL_NAME,

label2id=label2id,

id2label=id2label,

# Original model was trained with a different number of output classes to ours

# So we'll ignore any mismatched sizes (e.g. 91 vs. 7)

# Try turning this to False and see what happens

ignore_mismatched_sizes=True,

)

# Uncomment to see full model architecture

# modelSome weights of RTDetrV2ForObjectDetection were not initialized from the model checkpoint at PekingU/rtdetr_v2_r50vd and are newly initialized because the shapes did not match:

- model.decoder.class_embed.0.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.0.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.decoder.class_embed.1.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.1.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.decoder.class_embed.2.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.2.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.decoder.class_embed.3.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.3.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.decoder.class_embed.4.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.4.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.decoder.class_embed.5.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.5.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.denoising_class_embed.weight: found shape torch.Size([81, 256]) in the checkpoint and torch.Size([8, 256]) in the model instantiated

- model.enc_score_head.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.enc_score_head.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.Beautiful!

We’ve got a model ready.

You might’ve noticed a warning about the model needing to be trained on a down-stream task:

Some weights of RTDetrV2ForObjectDetection were not initialized from the model checkpoint at PekingU/rtdetr_v2_r50vd and are newly initialized because the shapes did not match: … - model.enc_score_head.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated - model.enc_score_head.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

This is because our model has a different number of target classes (7 in total) comapred to the original model (91 in total, from the COCO dataset).

So in order to get this pretrained model to work on our dataset, we’ll need to fine-tune it.

You might also notice that if you set ignore_mismatched_sizes=False, you’ll get an error:

RuntimeError: Error(s) in loading state_dict for Linear: size mismatch for bias: copying a param with shape torch.Size([80]) from checkpoint, the shape in current model is torch.Size([7]).

This is a similar warning to the one above.

Keep this is mind for when you’re working with pretrained models.

If you are using data slightly different to what the model was trained on, you may need to alter the setup hyperparameters as well as fine-tune it on your own data.

6.3 Inspecting our model’s layers

We can inspect the full model architecture by running print(model) (I’ve commented this out for brevity).

And if you do so, you’ll see a large list of layers which combine to contribute to make the overall model.

The following subset of layers has been truncated for brevity.

# Shortened version of the model architecture, print the full model to see all layers

RTDetrV2ForObjectDetection(

(model): RTDetrV2Model(

(backbone): RTDetrV2ConvEncoder(

(model): RTDetrResNetBackbone(

(embedder): RTDetrResNetEmbeddings(

(embedder): Sequential(

(0): RTDetrResNetConvLayer(

(convolution): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(normalization): RTDetrV2FrozenBatchNorm2d()

(activation): ReLU()

)

... [Many layers here] ...

)

)

(class_embed): ModuleList(

(0-5): 6 x Linear(in_features=256, out_features=7, bias=True)

)

(bbox_embed): ModuleList(

(0-5): 6 x RTDetrV2MLPPredictionHead(

(layers): ModuleList(

(0-1): 2 x Linear(in_features=256, out_features=256, bias=True)

(2): Linear(in_features=256, out_features=4, bias=True)

)

)

)

)If we check out a few of our model’s layers, we can see that it is a combination of convolutional, attention, MLP (multi-layer perceptron) and linear layers.

I’ll leave exploring each of these layer types for extra-curriculum, you can see the source code for the model in the modeling_rt_detr_v2.py file on the transformers GitHub.

For now, think of them as progressively pattern extractors.

We’ll feed our input image into our model and layer by layer it will manipulate the pixel values to try and extract patterns in a way so that its internal parameters matches the image to its input annotations.

More specifically, if we dive into the final two layer sections:

class_embed= classification head without_features=7(one for each of our labels,'bin', 'hand', 'not_bin', 'not_hand', 'not_trash', 'trash', 'trash_arm']).bbox_embed= regression head without_features=4(one for each of our bbox coordinates, e.g.[center_x, center_y, width, height]).

print(f"[INFO] Final classification layer: {model.class_embed}\n")

print(f"[INFO] Final box regression layer: {model.bbox_embed}")[INFO] Final classification layer: ModuleList(

(0-5): 6 x Linear(in_features=256, out_features=7, bias=True)

)

[INFO] Final box regression layer: ModuleList(

(0-5): 6 x RTDetrV2MLPPredictionHead(

(layers): ModuleList(

(0-1): 2 x Linear(in_features=256, out_features=256, bias=True)

(2): Linear(in_features=256, out_features=4, bias=True)

)

)

)These two layers are what are going to output the final predictions of our model in structure similar to our annotations.

The class_embed will output the predicted class label of a given bounding box output from bbox_predictor.

In essence, we are trying to get all of the pretrained patterns (also called parameters/weights & biases) of the previous layers to conform to the ideal outputs we’d like at the end.

6.4 Counting the number of parameters in our model

Parameters are individual values which contribute to a model’s final output.

Parameters are also referred to as weights and biases.

You can think of these individual weights as small pushes and pulls on the input data to get it to match the input annotations.

If our weights were perfect, we could input an image and always get back the correct bounding boxes and class labels.

It’s very unlikely to ever have perfect weights (unless your dataset is very small) but we can make them quite good (and useful).

When you have a good set of weights, this is known as a good representation.

Right now, our weights have been trained on COCO, a collection of 91 different common objects.

So they have a fairly good representation of detecting general common objects, however, we’d like to fine-tune these weights to detect our target objects.

Importantly, our model will not be starting from scratch when it begins to train.

It will instead take off from its existing knowledge of detecting common objects in images and try to adhere to our task.

When it comes to parameters and weights, generally, more is better.

Meaning the more parameters your model has, the better representation it can learn.

For example, ResNet50 (our computer vision backbone) has ~25 million parameters, about 100 MB in float32 precision or 50MB in float16 precision.

Whereas a model such as Llama-3.1-405B has ~405 billion parameters, about 1.45 TB in float32 precision or 740 GB in float16 precision, about 16,000x more than ResNet50.

However, as we can see having more parameters comes with the tradeoff of size and latency.

For each new parameter requires to be stored and it also adds an extra computation unit to your model.

In the case of Trashify, since we’d like our model to run on-device (e.g. make predictions live on an iPhone), we’d opt for the smallest number of parameters we could get acceptable results from.

If performance is your number 1 criteria and size and latency don’t matter, then you’d likely opt for the model with the largest number of parameters (though always evaluate these models on your own data, larger models are generally better, not always better).

Since our model is built using PyTorch, let’s write a small function to count the number of:

- Trainable parameters (parameters which will be tweaked during training)

- Non-trainable parameters (parameters which will not be tweaked during training)

- Total parameters (trainable parameters + non-trainable parameters)

# Count the number of parameters in the model

def count_parameters(model):

"""Takes in a PyTorch model and returns the number of parameters."""

trainable_parameters = sum(p.numel() for p in model.parameters() if p.requires_grad)

non_trainable_parameters = sum(p.numel() for p in model.parameters() if not p.requires_grad)

total_parameters = sum(p.numel() for p in model.parameters())

print(f"Total parameters: {total_parameters:,}")

print(f"Trainable parameters (will be updated): {trainable_parameters:,}")

print(f"Non-trainable parameters (will not be updated): {non_trainable_parameters:,}")

count_parameters(model)Total parameters: 42,741,357

Trainable parameters (will be updated): 42,741,357

Non-trainable parameters (will not be updated): 0Cool!

It looks like our model has a total of 42,741,357 parameters, of which, most of them are trainable.

This means that when we fine-tune our model later on, we’ll be tweaking the majority of the parameters to try and represent our data.

In practice, this is known as full fine-tuning, trying to fine-tune a large portion of the model to our data.

There are other methods for fine-tuning, such as feature extraction (where you only fine-tune the final layers of the model) and partial fine-tuning (where you fine-tune a portion of the model).

And even methods such as LoRA (Low-Rank Adaptation) which fine-tunes an adaptor matrix as a compliment to the model’s parameters.

6.5 Creating a function to build our model

Since machine learning is very experimental, we may want to create multiple instances of our model to test various things.

So let’s functionize the creation of a new model with parameters for our target model name, id2label and label2id dictionaries.

from transformers import AutoModelForObjectDetection

# Setup the model

def create_model(pretrained_model_name_or_path: str = MODEL_NAME,

label2id: dict = label2id,

id2label: dict = id2label):

"""Creates and returns an instance of AutoModelForObjectDetection.

Args:

pretrained_model_name_or_path (str): The name or path of the pretrained model to load.

Defaults to MODEL_NAME.

label2id (dict): A dictionary mapping class labels to IDs. Defaults to label2id.

id2label (dict): A dictionary mapping class IDs to labels. Defaults to id2label.

Returns:

AutoModelForObjectDetection: A pretrained model for object detection with number of output

classes equivalent to len(label2id).

"""

model = AutoModelForObjectDetection.from_pretrained(

pretrained_model_name_or_path=pretrained_model_name_or_path,

label2id=label2id,

id2label=id2label,

ignore_mismatched_sizes=True, # default

)

return modelPerfect!

And to make sure our function works…

# Create a new model instance

model = create_model()

# modelSome weights of RTDetrV2ForObjectDetection were not initialized from the model checkpoint at PekingU/rtdetr_v2_r50vd and are newly initialized because the shapes did not match:

- model.decoder.class_embed.0.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.0.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.decoder.class_embed.1.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.1.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.decoder.class_embed.2.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.2.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.decoder.class_embed.3.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.3.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.decoder.class_embed.4.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.4.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.decoder.class_embed.5.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.decoder.class_embed.5.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

- model.denoising_class_embed.weight: found shape torch.Size([81, 256]) in the checkpoint and torch.Size([8, 256]) in the model instantiated

- model.enc_score_head.bias: found shape torch.Size([80]) in the checkpoint and torch.Size([7]) in the model instantiated

- model.enc_score_head.weight: found shape torch.Size([80, 256]) in the checkpoint and torch.Size([7, 256]) in the model instantiated

You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.6.6 Trying to pass a single sample through our model (part 1)

Okay, now we’ve got a model, let’s put some data through it!

When we call our model, because it’s a PyTorch Module (torch.nn.Module) it will by default run the forward method.

In PyTorch, forward overrides the special __call__ method on functions.

So we can pass data into our model by running:

model(input_data)Which is equivalent to running:

model.forward(input_data)To see what happens when we call our model, let’s inspect the forward method’s docstring with model.forward?.

# What happens when we call our model?

# Note: for PyTorch modules, `forward` overrides the __call__ method,

# so calling the model is equivalent to calling the forward method.

model.forward?Output of model.forward?

Signature:

model.forward(

pixel_values: torch.FloatTensor,

pixel_mask: Optional[torch.LongTensor] = None,

encoder_outputs: Optional[torch.FloatTensor] = None,

inputs_embeds: Optional[torch.FloatTensor] = None,

decoder_inputs_embeds: Optional[torch.FloatTensor] = None,

labels: Optional[List[dict]] = None,

output_attentions: Optional[bool] = None,

output_hidden_states: Optional[bool] = None,

return_dict: Optional[bool] = None,

**loss_kwargs,

) -> Union[Tuple[torch.FloatTensor], transformers.models.rt_detr_v2.modeling_rt_detr_v2.RTDetrV2ObjectDetectionOutput]

Docstring:

The [`RTDetrV2ForObjectDetection`] forward method, overrides the `__call__` special method.

<Tip>

Although the recipe for forward pass needs to be defined within this function, one should call the [`Module`]

instance afterwards instead of this since the former takes care of running the pre and post processing steps while

the latter silently ignores them.

</Tip>

Args:

pixel_values (`torch.FloatTensor` of shape `(batch_size, num_channels, image_size, image_size)`):

The tensors corresponding to the input images. Pixel values can be obtained using

[`{image_processor_class}`]. See [`{image_processor_class}.__call__`] for details ([`{processor_class}`] uses

[`{image_processor_class}`] for processing images).

pixel_mask (`torch.LongTensor` of shape `(batch_size, height, width)`, *optional*):

Mask to avoid performing attention on padding pixel values. Mask values selected in `[0, 1]`:

- 1 for pixels that are real (i.e. **not masked**),

- 0 for pixels that are padding (i.e. **masked**).

[What are attention masks?](../glossary#attention-mask)

encoder_outputs (`torch.FloatTensor`, *optional*):

Tuple consists of (`last_hidden_state`, *optional*: `hidden_states`, *optional*: `attentions`)

`last_hidden_state` of shape `(batch_size, sequence_length, hidden_size)`, *optional*) is a sequence of

hidden-states at the output of the last layer of the encoder. Used in the cross-attention of the decoder.

inputs_embeds (`torch.FloatTensor` of shape `(batch_size, sequence_length, hidden_size)`, *optional*):

Optionally, instead of passing the flattened feature map (output of the backbone + projection layer), you

can choose to directly pass a flattened representation of an image.

decoder_inputs_embeds (`torch.FloatTensor` of shape `(batch_size, num_queries, hidden_size)`, *optional*):

Optionally, instead of initializing the queries with a tensor of zeros, you can choose to directly pass an

embedded representation.

labels (`List[dict]` of len `(batch_size,)`, *optional*):

Labels for computing the bipartite matching loss. List of dicts, each dictionary containing at least the

following 2 keys: 'class_labels' and 'boxes' (the class labels and bounding boxes of an image in the batch

respectively). The class labels themselves should be a `torch.LongTensor` of len `(number of bounding boxes

in the image,)` and the boxes a `torch.FloatTensor` of shape `(number of bounding boxes in the image, 4)`.

output_attentions (`bool`, *optional*):

Whether or not to return the attentions tensors of all attention layers. See `attentions` under returned

tensors for more detail.

output_hidden_states (`bool`, *optional*):

Whether or not to return the hidden states of all layers. See `hidden_states` under returned tensors for

more detail.

return_dict (`bool`, *optional*):

Whether or not to return a [`~utils.ModelOutput`] instead of a plain tuple.

Returns:

[`transformers.models.rt_detr_v2.modeling_rt_detr_v2.RTDetrV2ObjectDetectionOutput`] or `tuple(torch.FloatTensor)`: A [`transformers.models.rt_detr_v2.modeling_rt_detr_v2.RTDetrV2ObjectDetectionOutput`] or a tuple of

`torch.FloatTensor` (if `return_dict=False` is passed or when `config.return_dict=False`) comprising various

elements depending on the configuration ([`RTDetrV2Config`]) and inputs.

- **loss** (`torch.FloatTensor` of shape `(1,)`, *optional*, returned when `labels` are provided)) -- Total loss as a linear combination of a negative log-likehood (cross-entropy) for class prediction and a

bounding box loss. The latter is defined as a linear combination of the L1 loss and the generalized

scale-invariant IoU loss.

- **loss_dict** (`Dict`, *optional*) -- A dictionary containing the individual losses. Useful for logging.

- **logits** (`torch.FloatTensor` of shape `(batch_size, num_queries, num_classes + 1)`) -- Classification logits (including no-object) for all queries.

- **pred_boxes** (`torch.FloatTensor` of shape `(batch_size, num_queries, 4)`) -- Normalized boxes coordinates for all queries, represented as (center_x, center_y, width, height). These

values are normalized in [0, 1], relative to the size of each individual image in the batch (disregarding

possible padding). You can use [`~RTDetrV2ImageProcessor.post_process_object_detection`] to retrieve the

unnormalized (absolute) bounding boxes.

- **auxiliary_outputs** (`list[Dict]`, *optional*) -- Optional, only returned when auxiliary losses are activated (i.e. `config.auxiliary_loss` is set to `True`)

and labels are provided. It is a list of dictionaries containing the two above keys (`logits` and

`pred_boxes`) for each decoder layer.

- **last_hidden_state** (`torch.FloatTensor` of shape `(batch_size, num_queries, hidden_size)`) -- Sequence of hidden-states at the output of the last layer of the decoder of the model.

- **intermediate_hidden_states** (`torch.FloatTensor` of shape `(batch_size, config.decoder_layers, num_queries, hidden_size)`) -- Stacked intermediate hidden states (output of each layer of the decoder).

- **intermediate_logits** (`torch.FloatTensor` of shape `(batch_size, config.decoder_layers, num_queries, config.num_labels)`) -- Stacked intermediate logits (logits of each layer of the decoder).

- **intermediate_reference_points** (`torch.FloatTensor` of shape `(batch_size, config.decoder_layers, num_queries, 4)`) -- Stacked intermediate reference points (reference points of each layer of the decoder).

- **intermediate_predicted_corners** (`torch.FloatTensor` of shape `(batch_size, config.decoder_layers, num_queries, 4)`) -- Stacked intermediate predicted corners (predicted corners of each layer of the decoder).

- **initial_reference_points** (`torch.FloatTensor` of shape `(batch_size, config.decoder_layers, num_queries, 4)`) -- Stacked initial reference points (initial reference points of each layer of the decoder).

- **decoder_hidden_states** (`tuple(torch.FloatTensor)`, *optional*, returned when `output_hidden_states=True` is passed or when `config.output_hidden_states=True`) -- Tuple of `torch.FloatTensor` (one for the output of the embeddings + one for the output of each layer) of

shape `(batch_size, num_queries, hidden_size)`. Hidden-states of the decoder at the output of each layer

plus the initial embedding outputs.

- **decoder_attentions** (`tuple(torch.FloatTensor)`, *optional*, returned when `output_attentions=True` is passed or when `config.output_attentions=True`) -- Tuple of `torch.FloatTensor` (one for each layer) of shape `(batch_size, num_heads, num_queries,

num_queries)`. Attentions weights of the decoder, after the attention softmax, used to compute the weighted

average in the self-attention heads.

- **cross_attentions** (`tuple(torch.FloatTensor)`, *optional*, returned when `output_attentions=True` is passed or when `config.output_attentions=True`) -- Tuple of `torch.FloatTensor` (one for each layer) of shape `(batch_size, num_queries, num_heads, 4, 4)`.

Attentions weights of the decoder's cross-attention layer, after the attention softmax, used to compute the

weighted average in the cross-attention heads.

- **encoder_last_hidden_state** (`torch.FloatTensor` of shape `(batch_size, sequence_length, hidden_size)`, *optional*) -- Sequence of hidden-states at the output of the last layer of the encoder of the model.

- **encoder_hidden_states** (`tuple(torch.FloatTensor)`, *optional*, returned when `output_hidden_states=True` is passed or when `config.output_hidden_states=True`) -- Tuple of `torch.FloatTensor` (one for the output of the embeddings + one for the output of each layer) of

shape `(batch_size, sequence_length, hidden_size)`. Hidden-states of the encoder at the output of each

layer plus the initial embedding outputs.

- **encoder_attentions** (`tuple(torch.FloatTensor)`, *optional*, returned when `output_attentions=True` is passed or when `config.output_attentions=True`) -- Tuple of `torch.FloatTensor` (one for each layer) of shape `(batch_size, num_queries, num_heads, 4, 4)`.

Attentions weights of the encoder, after the attention softmax, used to compute the weighted average in the

self-attention heads.

- **init_reference_points** (`torch.FloatTensor` of shape `(batch_size, num_queries, 4)`) -- Initial reference points sent through the Transformer decoder.

- **enc_topk_logits** (`torch.FloatTensor` of shape `(batch_size, sequence_length, config.num_labels)`, *optional*, returned when `config.with_box_refine=True` and `config.two_stage=True`) -- Logits of predicted bounding boxes coordinates in the encoder.

- **enc_topk_bboxes** (`torch.FloatTensor` of shape `(batch_size, sequence_length, 4)`, *optional*, returned when `config.with_box_refine=True` and `config.two_stage=True`) -- Logits of predicted bounding boxes coordinates in the encoder.

- **enc_outputs_class** (`torch.FloatTensor` of shape `(batch_size, sequence_length, config.num_labels)`, *optional*, returned when `config.with_box_refine=True` and `config.two_stage=True`) -- Predicted bounding boxes scores where the top `config.two_stage_num_proposals` scoring bounding boxes are

picked as region proposals in the first stage. Output of bounding box binary classification (i.e.

foreground and background).

- **enc_outputs_coord_logits** (`torch.FloatTensor` of shape `(batch_size, sequence_length, 4)`, *optional*, returned when `config.with_box_refine=True` and `config.two_stage=True`) -- Logits of predicted bounding boxes coordinates in the first stage.

- **denoising_meta_values** (`dict`) -- Extra dictionary for the denoising related values

Examples:

```python

>>> from transformers import RTDetrV2ImageProcessor, RTDetrV2ForObjectDetection

>>> from PIL import Image

>>> import requests

>>> import torch

>>> url = "http://images.cocodataset.org/val2017/000000039769.jpg"

>>> image = Image.open(requests.get(url, stream=True).raw)

>>> image_processor = RTDetrV2ImageProcessor.from_pretrained("PekingU/RTDetrV2_r50vd")

>>> model = RTDetrV2ForObjectDetection.from_pretrained("PekingU/RTDetrV2_r50vd")

>>> # prepare image for the model

>>> inputs = image_processor(images=image, return_tensors="pt")

>>> # forward pass

>>> outputs = model(**inputs)

>>> logits = outputs.logits

>>> list(logits.shape)

[1, 300, 80]

>>> boxes = outputs.pred_boxes

>>> list(boxes.shape)

[1, 300, 4]

>>> # convert outputs (bounding boxes and class logits) to Pascal VOC format (xmin, ymin, xmax, ymax)

>>> target_sizes = torch.tensor([image.size[::-1]])

>>> results = image_processor.post_process_object_detection(outputs, threshold=0.9, target_sizes=target_sizes)[

... 0

... ]

>>> for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):

... box = [round(i, 2) for i in box.tolist()]

... print(

... f"Detected {model.config.id2label[label.item()]} with confidence "

... f"{round(score.item(), 3)} at location {box}"

... )

Detected sofa with confidence 0.97 at location [0.14, 0.38, 640.13, 476.21]

Detected cat with confidence 0.96 at location [343.38, 24.28, 640.14, 371.5]

Detected cat with confidence 0.958 at location [13.23, 54.18, 318.98, 472.22]

Detected remote with confidence 0.951 at location [40.11, 73.44, 175.96, 118.48]

Detected remote with confidence 0.924 at location [333.73, 76.58, 369.97, 186.99]File: ~/miniconda3/envs/ai/lib/python3.11/site-packages/transformers/models/rt_detr_v2/modeling_rt_detr_v2.py Type: method

Running model.forward? we can see that our model wants to take in pixel_values as well as a pixel_mask as arguments.

What happens if we try to pass in a single image from our random_sample?

Let’s try!

It’s good practice to try and pass a single sample through your model as soon as possible to see what happens.

If we’re lucky, it’ll work.

If we’re really lucky, we’ll get an error message saying why it didn’t work (this is usually the case because rarely does raw data flow through a model without being preprocessed first).

We’ll do so by setting pixel_values to our random_sample["image"] and pixel_mask=None.

# Do a single forward pass with the model

random_sample_outputs = model(pixel_values=random_sample["image"],

pixel_mask=None)

random_sample_outputsOutput of random_sample_outputs

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

Cell In[21], line 3

1 #| output: false

2 # Do a single forward pass with the model

----> 3 random_sample_outputs = model(pixel_values=random_sample["image"],

4 pixel_mask=None)

5 random_sample_outputs

File ~/miniconda3/envs/ai/lib/python3.11/site-packages/torch/nn/modules/module.py:1751, in Module._wrapped_call_impl(self, *args, **kwargs)

1749 return self._compiled_call_impl(*args, **kwargs) # type: ignore[misc]

1750 else:

-> 1751 return self._call_impl(*args, **kwargs)

File ~/miniconda3/envs/ai/lib/python3.11/site-packages/torch/nn/modules/module.py:1762, in Module._call_impl(self, *args, **kwargs)

1757 # If we don't have any hooks, we want to skip the rest of the logic in

1758 # this function, and just call forward.

1759 if not (self._backward_hooks or self._backward_pre_hooks or self._forward_hooks or self._forward_pre_hooks

1760 or _global_backward_pre_hooks or _global_backward_hooks

1761 or _global_forward_hooks or _global_forward_pre_hooks):

-> 1762 return forward_call(*args, **kwargs)

1764 result = None

1765 called_always_called_hooks = set()

File ~/miniconda3/envs/ai/lib/python3.11/site-packages/transformers/models/rt_detr_v2/modeling_rt_detr_v2.py:1967, in RTDetrV2ForObjectDetection.forward(self, pixel_values, pixel_mask, encoder_outputs, inputs_embeds, decoder_inputs_embeds, labels, output_attentions, output_hidden_states, return_dict, **loss_kwargs)

1962 output_hidden_states = (

1963 output_hidden_states if output_hidden_states is not None else self.config.output_hidden_states

1964 )

1965 return_dict = return_dict if return_dict is not None else self.config.use_return_dict

-> 1967 outputs = self.model(

1968 pixel_values,

1969 pixel_mask=pixel_mask,

1970 encoder_outputs=encoder_outputs,

1971 inputs_embeds=inputs_embeds,

1972 decoder_inputs_embeds=decoder_inputs_embeds,

1973 labels=labels,

1974 output_attentions=output_attentions,

1975 output_hidden_states=output_hidden_states,

1976 return_dict=return_dict,

1977 )

1979 denoising_meta_values = (

1980 outputs.denoising_meta_values if return_dict else outputs[-1] if self.training else None

1981 )

1983 outputs_class = outputs.intermediate_logits if return_dict else outputs[2]

File ~/miniconda3/envs/ai/lib/python3.11/site-packages/torch/nn/modules/module.py:1751, in Module._wrapped_call_impl(self, *args, **kwargs)

1749 return self._compiled_call_impl(*args, **kwargs) # type: ignore[misc]

1750 else:

-> 1751 return self._call_impl(*args, **kwargs)

File ~/miniconda3/envs/ai/lib/python3.11/site-packages/torch/nn/modules/module.py:1762, in Module._call_impl(self, *args, **kwargs)

1757 # If we don't have any hooks, we want to skip the rest of the logic in

1758 # this function, and just call forward.

1759 if not (self._backward_hooks or self._backward_pre_hooks or self._forward_hooks or self._forward_pre_hooks

1760 or _global_backward_pre_hooks or _global_backward_hooks

1761 or _global_forward_hooks or _global_forward_pre_hooks):

-> 1762 return forward_call(*args, **kwargs)

1764 result = None

1765 called_always_called_hooks = set()

File ~/miniconda3/envs/ai/lib/python3.11/site-packages/transformers/models/rt_detr_v2/modeling_rt_detr_v2.py:1658, in RTDetrV2Model.forward(self, pixel_values, pixel_mask, encoder_outputs, inputs_embeds, decoder_inputs_embeds, labels, output_attentions, output_hidden_states, return_dict)

1653 output_hidden_states = (

1654 output_hidden_states if output_hidden_states is not None else self.config.output_hidden_states

1655 )

1656 return_dict = return_dict if return_dict is not None else self.config.use_return_dict

-> 1658 batch_size, num_channels, height, width = pixel_values.shape

1659 device = pixel_values.device

1661 if pixel_mask is None:

AttributeError: 'Image' object has no attribute 'shape'Oh no!… I mean… Oh, yes!

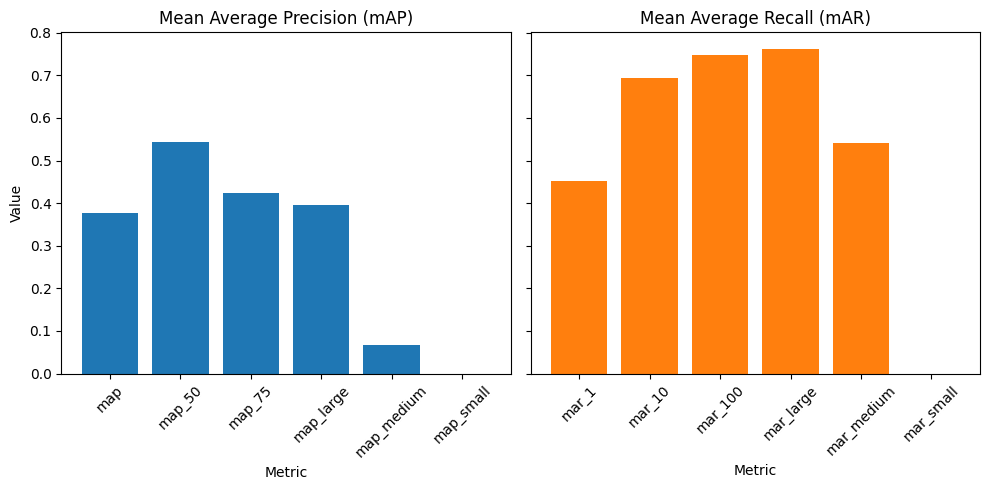

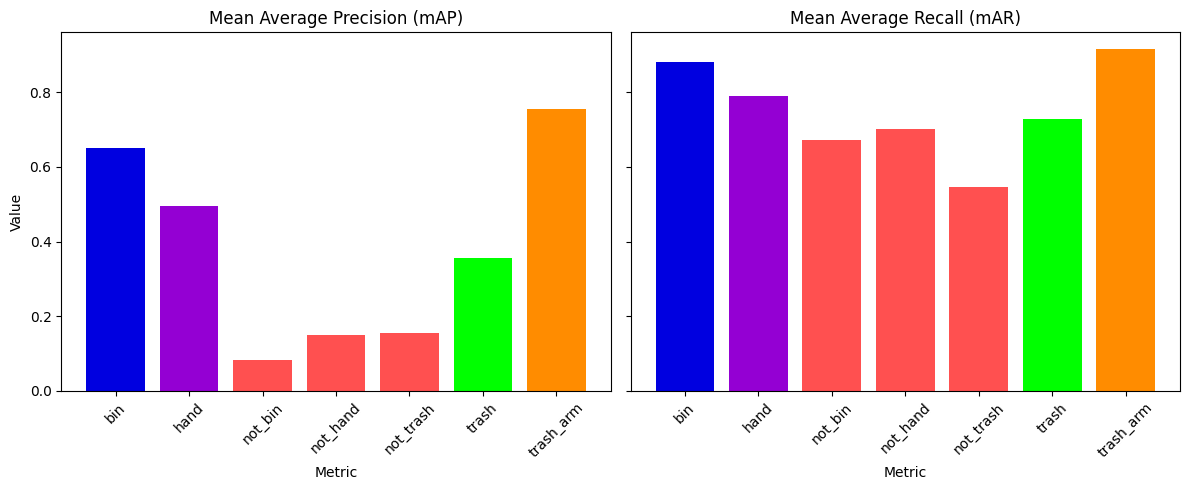

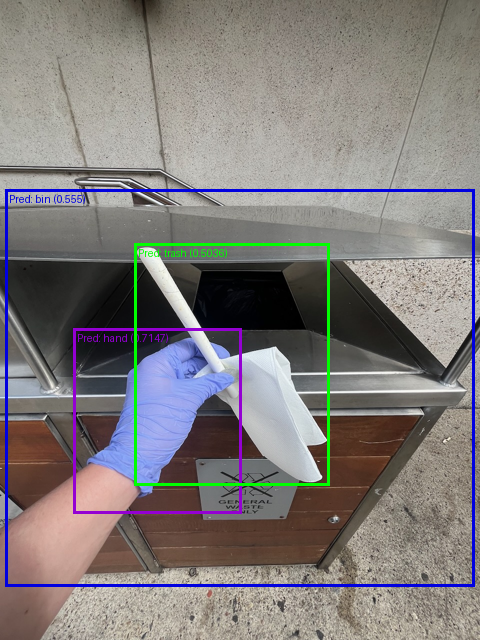

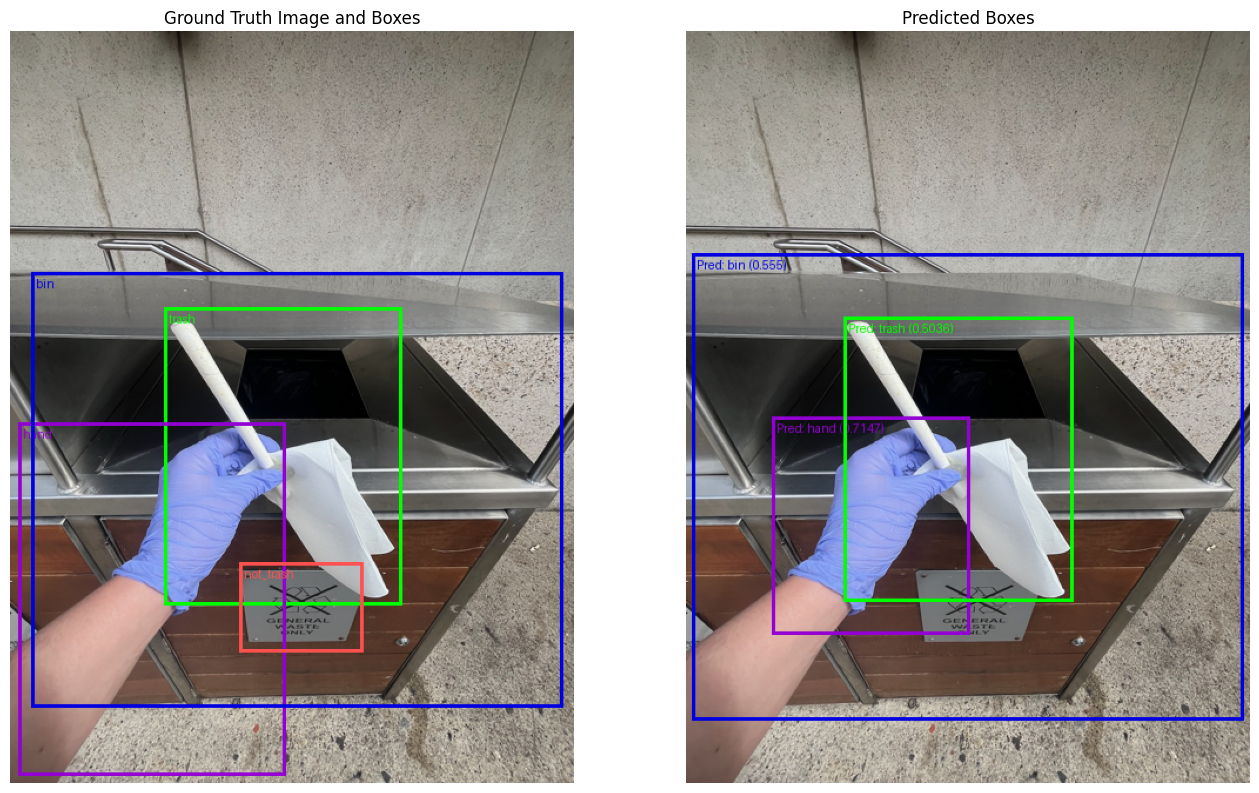

We get an error: